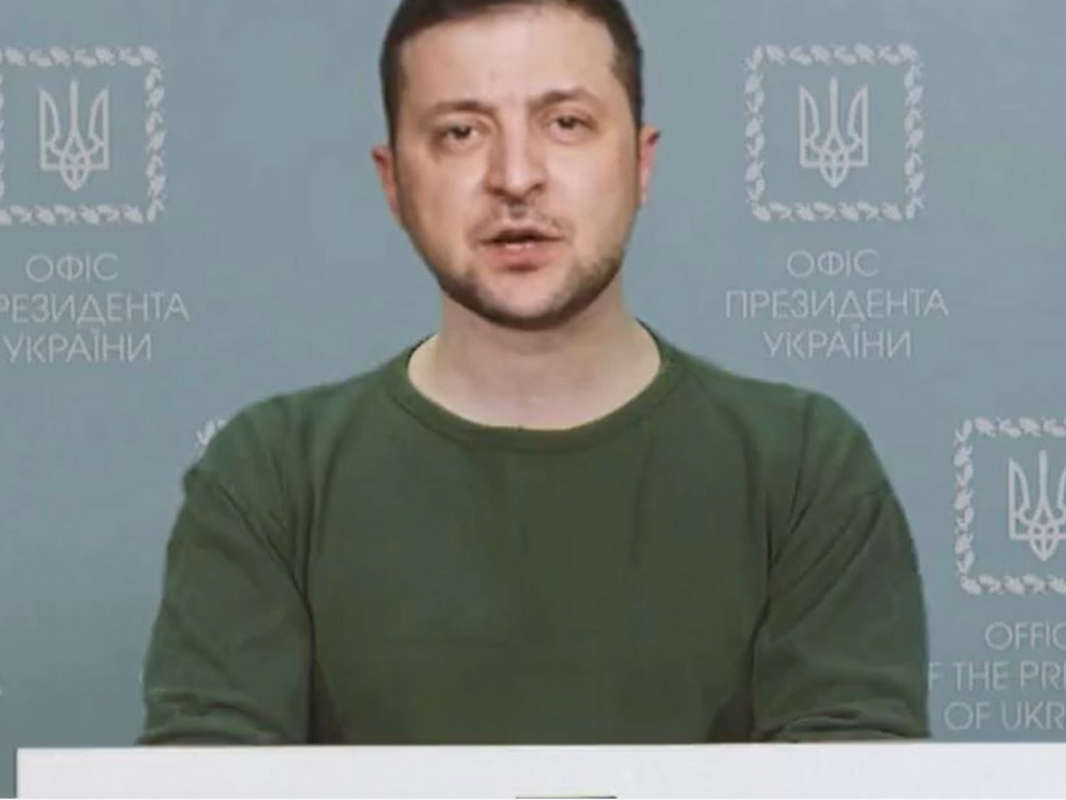

A fake video of the Ukrainian president telling people to surrender is being shared online.

The video shows what appears to be Volodymyr Zelenskyy standing behind a podium saying:

"It turned out to be not so easy being the president."

He goes on to declare that he has "decided to return Donbas" in eastern Ukraine to Russia and that his army's efforts in the war "have failed".

The short message ends with:

"My advice to you is to lay down arms and return to your families. It is not worth it dying in this war. My advice to you is to live. I am going to do the same."

But the video is a deepfake and it is easy to spot.

A deepfake is usually an image or video in which a person or object has been visually or audibly manipulated to appear to say or do something that never happened.

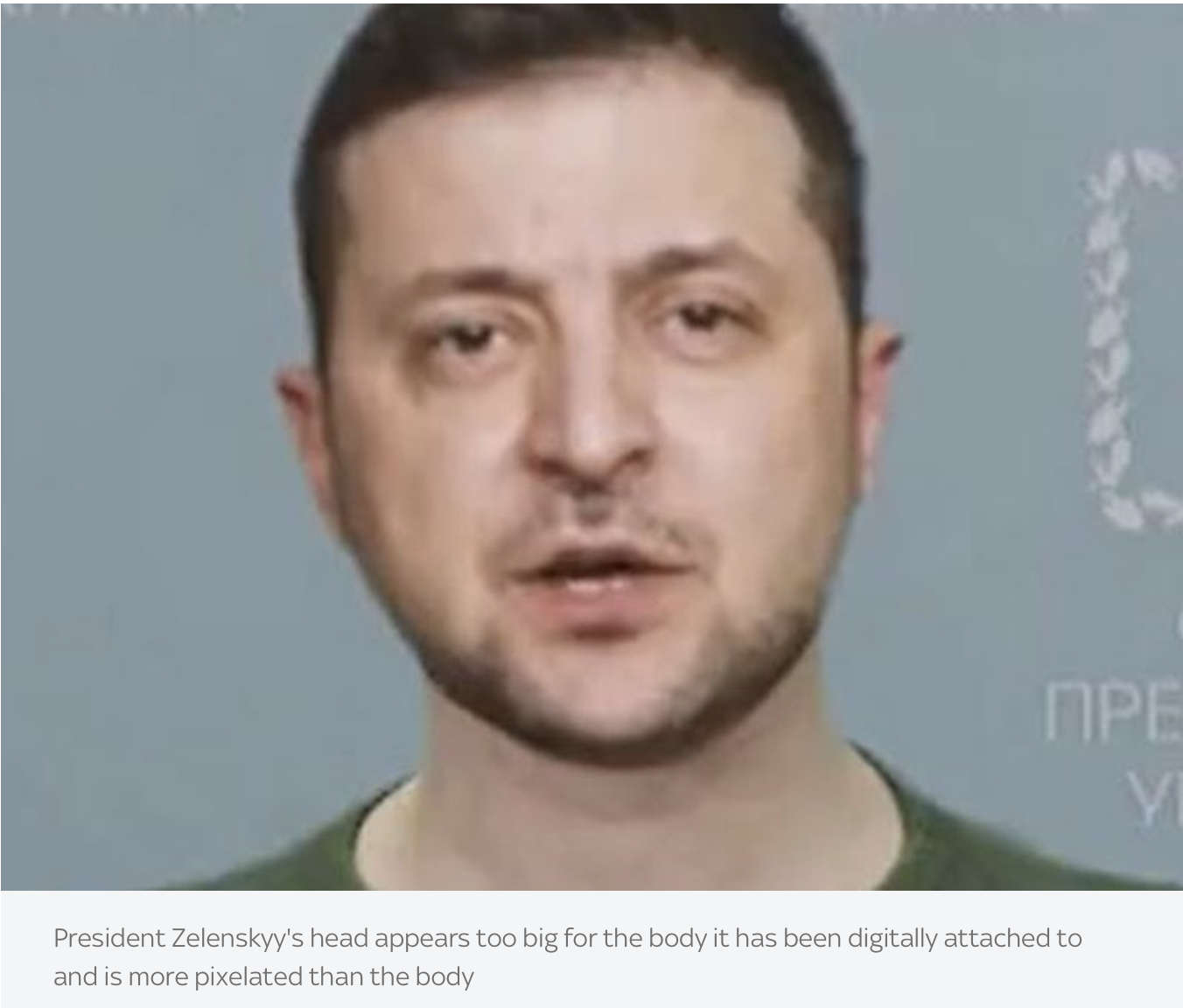

In the clip being shared online, President Zelenskyy's head is too big for the body it has been digitally attached to. It is also lit differently and sits at an awkward angle.

You can also see more pixelation around the fake Zelenskyy's head than on the body.

A translator working for Sky News said that the voice in the fake video was deeper and slower than Mr Zelenskyy's normal voice.

The real Zelenskyy later responded to the deepfake in a video posted on his official Instagram account.

In it he said:

"Good day. As for the latest childish provocation with advice to lay down arms, I only advise that the troops of the Russian Federation lay down their arms and return home.

"We are already home, we are defending our land, our children, our families. So, we are not going to lay down any arms until our victory."

The genuine selfie-style video was posted with the caption: "We are at home and defending Ukraine."

The deepfake appears to have been broadcast, along with a written message, on TV24, a Ukrainian TV channel that was reportedly hacked.

An archived version of the channel's news site shows the message was live on Wednesday.

Earlier this month, the Ukrainian Centre for Strategic Communications and Information Security issued a warning on Facebook about deepfakes of the president.

It said:

"Imagine seeing Vladimir Zelensky on TV making a surrender statement. You see it, you hear it - so it’s true. But this is not the truth. This is deepfake technology.

"This will not be a real video, but created through machine learning algorithms."

Professor Sandra Wachter, an expert on law and ethics of AI, Big Data and robotics at the University of Oxford, told Sky News:

"Spreading wrong or misleading information about political leaders is as old as humanity itself."

She added:

"We now have technology that can fake pictures, video and audio, and that we have the Internet where such information can spread more widely which makes it distinct from historical examples.

"Luckily, the technology is not yet advanced enough to create a fake that is undetectable. In the clip of Zelenskyy being circulated, there are clearly facial 'artifacts' (visible traces in the image left behind through the process of distorting the clip) as well as audio and scaling issues. Yet, as always technology is evolving fast and we must not sleepwalk into the situation."
